How To Forecast AGI

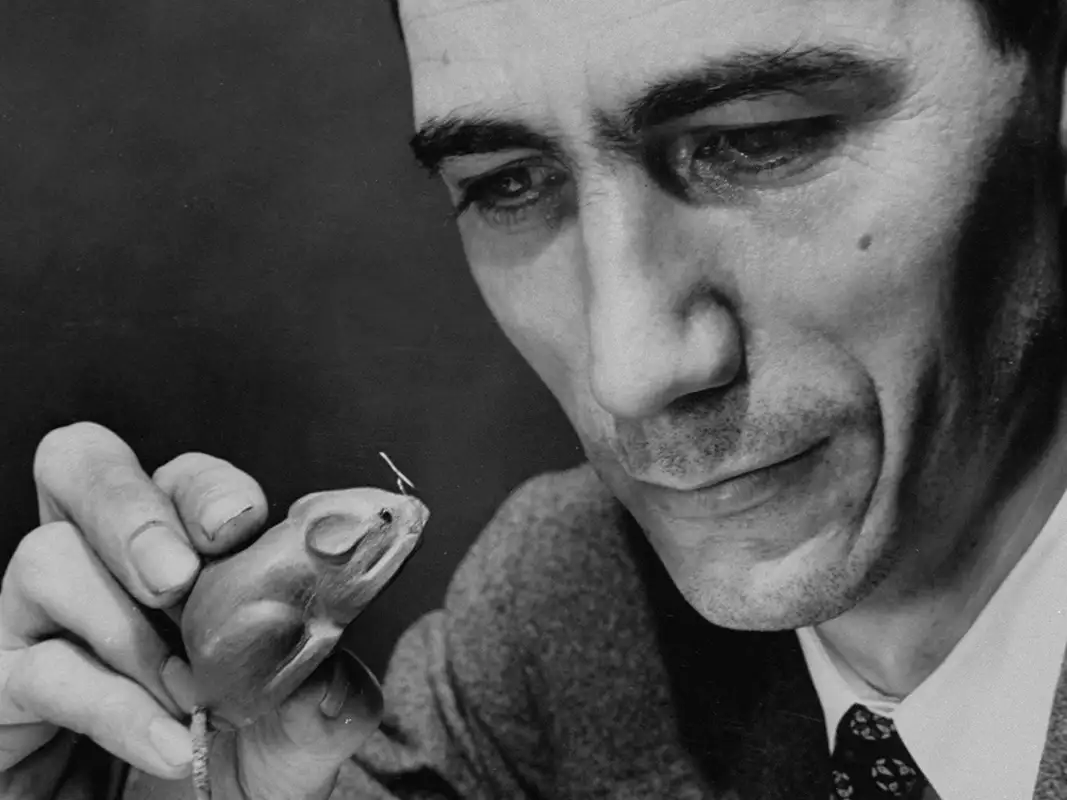

Andrej Karpathy is a software engineer best known for leading Tesla’s Autopilot team from 2017 to 2022. Before that, he taught Stanford’s CS231n course on convolutional neural networks, whose recorded lectures became widely watched in the early deep learning community. He was also a founding member of OpenAI, though his time there, from 2015 to 2017, came before its major advances with language models.

Karpathy recently appeared on Dwarkesh Patel’s podcast, where he once again generated buzz, something he is good at (he coined “vibe coding,” among other things). Although few people say it outright, most of the AI subculture assumes we’re within three to five years of achieving AGI. That’s the general undercurrent. So when Karpathy said he thinks we’re closer to ten years away, it landed as quite bearish, even if, in absolute terms, five extra years isn’t much. Still, these increments matter, and I think his reasoning reflects some flawed assumptions about forecasting. That’s what I want to unpack here.

In the interview, the estimate he offers is largely intuitive, a gut call informed by his experience trying to make self-driving cars at Tesla. His argument is that we’ve seen impressive demos for years, but actually achieving robust performance has taken far longer than expected. This is true, but I don’t find the logic compelling.

With hindsight, the main reason self-driving cars took so long is that we simply didn’t have enough computing power for software to learn how to drive. When Karpathy started at Tesla, and even when he left, the available compute was nowhere near what was required.

Karpathy’s intuition is shaped by the engineering hardship he’s lived through and all the unsolved problems he sees before us: the endless edge cases, brittle systems, and failure modes that plague real-world technology. But as Ray Kurzweil has argued for decades, that perspective can mislead even great engineers when they try to forecast the future. He calls it “the engineer’s pessimism,” the tendency to focus on the problems you already understand and ignore the solutions you can’t yet imagine. We also tend to default to linear extrapolations, overlooking the recursive nature of progress: the way each generation of technology is used to produce the next.

Philip Tetlock's decades of research on forecasting reinforce this point. Across thousands of predictions made by hundreds of experts, he found that specialist knowledge rarely improved forecast accuracy. In fact, the more an expert knew about their domain, the more confident they became in predictions that turned out to be wrong. The very things that make someone an expert (deep immersion in a field, intimate familiarity with its obstacles, years spent solving its hardest problems) can actually distort their sense of what's possible. They see the thousand small difficulties that lie between here and there, but miss the larger forces that might sweep those difficulties aside.

Kurzweil takes a more meta approach that is simple and disciplined: estimate what becomes possible at given levels of compute, then project when that compute will exist. Grounded in the steady exponential growth of hardware, it has proved far more reliable than most other forms of technological prediction. Kurzweil wasn’t flawless, but his framework gave him a clearer sense than most of the pace and direction of technological progress.
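To make the arithmetic behind that kind of projection concrete, here is a minimal sketch. It is my own illustration, not Kurzweil's actual model: the function name `years_until_compute` and every input number are hypothetical placeholders, and it assumes compute grows at a constant doubling time, which is itself a simplification.

```python
import math


def years_until_compute(current: float, required: float,
                        doubling_time_years: float) -> float:
    """Years until exponentially growing compute reaches `required`.

    Assumes a constant doubling time (a simplifying assumption).
    `current` and `required` just need to share the same unit.
    """
    if current <= 0 or required <= 0 or doubling_time_years <= 0:
        raise ValueError("all inputs must be positive")
    if required <= current:
        return 0.0  # the compute already exists
    doublings_needed = math.log2(required / current)
    return doublings_needed * doubling_time_years


# Hypothetical inputs, chosen only to show how the forecast moves:
# the required compute is 64x what is available today.
for doubling_time in (1.0, 1.5, 2.0):
    wait = years_until_compute(current=1.0, required=64.0,
                               doubling_time_years=doubling_time)
    print(f"doubling every {doubling_time} years -> wait {wait:.1f} years")
```

The output depends entirely on two inputs, the compute gap and the doubling time, so any disagreement about the forecast comes down to disagreement about those two numbers.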

In the late 1980s and early 1990s, long before most people had used email or heard of the web, Kurzweil described a near future where billions of people would carry powerful, networked computers in their pockets, capable of accessing all human knowledge and speaking naturally with users. He foresaw the rise of digital assistants, real-time translation, and the merging of phones, cameras, and computers into a single device, essentially describing the smartphone era. In the early 1990s, Kurzweil predicted that a computer would defeat a world chess champion by 1998, a milestone realized in 1997 when IBM’s Deep Blue beat Garry Kasparov. In 1999, using that same reasoning, he projected that computers would reach human-level intelligence by 2029—a bold prediction that now looks surprisingly plausible.

Karpathy’s work on self-driving cars was slow and ultimately became obsolete, not because the field couldn't cook up the right architecture, but because it began before the hardware could support the software. If you started building self-driving systems in 2025 instead of 2017, the experience would be very different. The timing was off.

Ironically, this is exactly why Ray Kurzweil began forecasting in the first place. He realized that many brilliant engineers fail not because their ideas are unsound but because they start too early, before the ingredients are available to realize their vision. His goal was to time invention, to predict when the underlying compute would finally make certain technologies feasible.

A realistic forecast for AGI should rest on two main factors: first, whether it’s a problem within reach of normal human cognitive ability; and second, how much compute is needed and when we will have it.

Markets handle the rest. They are the coordination layer of collective intelligence, directing talent and capital wherever value lies. Once a problem becomes economically or strategically important, markets mobilize vast human and material resources to attack it.

Since ChatGPT, the flow of human talent and compute into AI has exploded. Hundreds of thousands of cutting-edge GPUs now run day and night, drawing megawatts of power to train ever larger models. The global technical elite have converged on a single goal: building machines that can think. Every major research lab, startup, and university department is attacking the same bottlenecks: memory, sample efficiency, continual learning, and more. Once the computing power is available, that much concentrated intelligence all but guarantees the obstacles will collapse under its weight, and the invention will arrive on time.

Therefore, forecasting AGI remains a question of how much compute is needed and when we will have it. I'm not sure that updating our forecast in the fog of hype and doubt will do us much good. I think I'll stick to 2029.